Are we walking into an AI tragedy?

While Pope Francis insists that science should be at the service of humanity, ethical concerns seem to be an afterthought in the race to capture the AI market


Silicon Valley moves forward by following three rather straightforward steps: try things, see how they fail, try again. In more ways than one, it is but another example of the typical modern scientific-empirical method. But while this way of doing things “has brought us some incredibly cool consumer technology and fun websites” – as Kelsey Piper rightly puts it – AI is an entirely different game: it is no longer about coolness or fun, but about “robust engineering for reliability.”

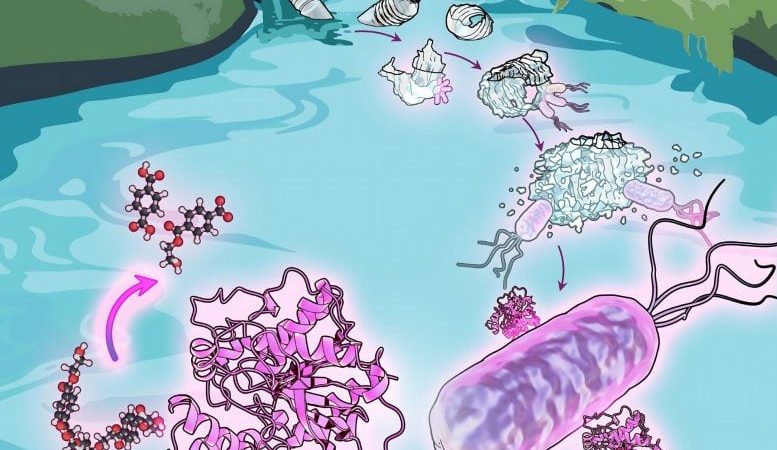

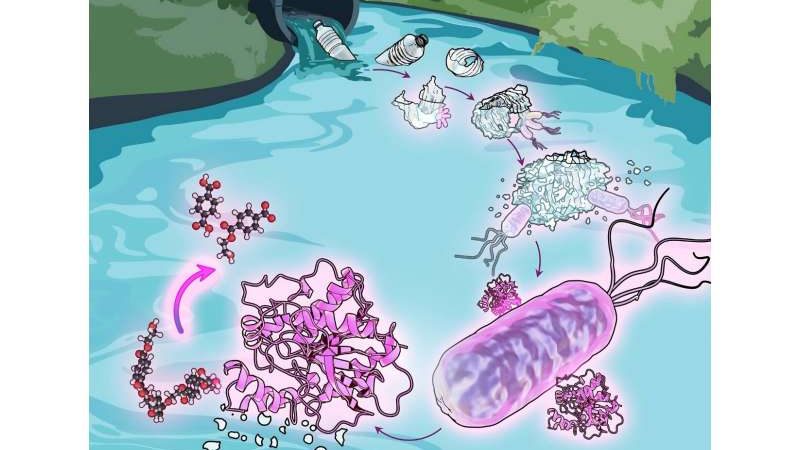

In a recently published interview with Time, DeepMind co-founder Demis Hassabis urged caution. DeepMind is a subsidiary of Google’s parent company, Alphabet, and one of the world’s leading artificial intelligence labs. One of its algorithms, AlphaFold, “had predicted the 3D structures of nearly all the proteins known to humanity, and […] the company was [is] making the technology behind it freely available,” the interview reads. What is more, “AlphaFold has already been a force multiplier for hundreds of thousands of scientists working on efforts such as developing malaria vaccines, fighting antibiotic resistance, and tackling plastic pollution.”

But with great power also comes great danger. In the same interview, Hassabis explained that “in recent months, researchers building an AI system to design new drugs revealed that their tool could be easily repurposed to make deadly new chemicals.”

“I would advocate,” Hassabis told Time, “not moving fast and breaking things.” Hassabis was alluding to Facebook’s old motto, “move fast and break things,” which became the company’s classic M.O.: engineers were encouraged to release new developments as soon as they were ready to launch and to worry later about solving the problems they might cause.

This appetite for disruption certainly helped Facebook to reach 3 billion users, but “it also left the company entirely unprepared when disinformation, hate speech, and even incitement to genocide began appearing on its platform.”

The main problem, Piper explains, is that tech “is often a winner-takes-all sector.” Even though there are plenty of search engines, Google alone controls more than 90% of the search market. But companies like Microsoft and Baidu now have “a once-in-a-lifetime shot at displacing Google and becoming the internet search giant with AI-enabled, friendlier interface.” There is nothing inherently wrong with this sort of competition, but “when it comes to very powerful technologies – and obviously AI is going to be one of the most powerful ever – we need to be careful,” Hassabis insists. “Not everybody is thinking about those things [i.e., ethical concerns]. It’s like experimentalists, many of whom don’t realize they’re holding dangerous material.” Competition can certainly be great, but it can also be threatening. As some analysts have warned, “we could stumble into an AI catastrophe.”

Maintaining the standards of science

Such concerns are also central to the Max Planck Society. The Max Planck Society for the Advancement of Science is a formally independent, non-governmental and non-profit association of German research institutes. Founded in 1911 as the Kaiser Wilhelm Society, it was renamed the Max Planck Society in 1948 in honor of its former president, theoretical physicist Max Planck.

In a note on the Pope’s address to the Society, Sophie Peeters explains that “Pope Francis highlighted the esteem of the Holy See for scientific research and, more specifically, for the work of the Max Planck Society in their commitment to the advancement of sciences and progress in their specific areas of research.”

But, perhaps more importantly, the Pontiff also encouraged the Society to maintain standards of “pure science” – that is, scientific research driven neither by political prejudice nor by sheer economic interest.

However, according to Pope Francis, the perils science faces today are not just political or economic: the Pontiff has repeatedly warned that priority is being given to purely “technical” concerns, leaving no room for ethical reflection on technological research and scientific developments. When functionality takes precedence over what is ethically licit, caring for others (which, the Pope recalls, is the priority of any human activity) disappears from the horizon.

AI: enormous development, and an equally enormous tragedy

At the close of its general assembly on emerging technologies last February, the Pontifical Academy for Life expressed concern about technological innovations that could “destroy everything.”

During a press conference, Archbishop Vincenzo Paglia, president of the Academy, called for an “international table” to regulate these new technologies.

These new “convergent technologies” (nanotechnology, biotechnology, computer science, and cognitive science), Paglia warned, can bring “enormous development” but also “an equally enormous tragedy.” Comparing them to nuclear power, he stressed the perils of letting algorithms decide human affairs: “Technology without ethics is a very dangerous thing […] we need to refer to moral values and principles in order to have criteria for evaluation.”