Effective Altruism’s Bait-and-Switch: From Global Poverty To AI Doomerism

from the come-for-the-ending-global-poverty,-stay-for-the-ai-doomerism dept

The Effective Altruism movement

Effective Altruism (EA) is typically explained as a philosophy that encourages individuals to do the “most good” with their resources (money, skills). Its “effective giving” aspect was marketed as evidence-based charities serving the global poor. The Effective Altruism philosophy was formally crystallized as a social movement with the launch of the Centre for Effective Altruism (CEA) in February 2012 by Toby Ord, Will MacAskill, Nick Beckstead, and Michelle Hutchinson. Two other organizations, “Giving What We Can” (GWWC) and “80,000 Hours,” were brought under CEA’s umbrella, and the movement became officially known as Effective Altruism.

Effective Altruists (EAs) were praised in the media as “charity nerds” looking to maximize the number of “lives saved” per dollar spent, with initiatives like providing anti-malarial bed nets in sub-Saharan Africa.

If this movement sounds familiar to you, it’s thanks to Sam Bankman-Fried (SBF). With FTX, Bankman-Fried was attempting to fulfill what William MacAskill taught him about Effective Altruism: “Earn to give.” In November 2023, SBF was convicted of seven fraud charges (stealing $10 billion from customers and investors). In March 2024, SBF was sentenced to 25 years in prison. Since SBF was one of the largest Effective Altruism donors, public perception of this movement has declined due to his fraudulent behavior. It turned out that the “Earn to give” concept was susceptible to the “Ends justify the means” mentality.

In 2016, the main funder of Effective Altruism, Open Philanthropy, designated “AI Safety” a priority area, and the leading EA organization 80,000 Hours declared that existential risk (x-risk) from artificial intelligence (AI) is the world’s most pressing problem. It looked like a major shift in focus and was portrayed as a “mission drift.” It wasn’t.

What looked to outsiders – in the general public, academia, media, and politics – as a “sudden embrace of AI x-risk” was a misconception. The confusion existed because many people were unaware that Effective Altruism has always been devoted to this agenda.

Effective Altruism’s “brand management”

Since its inception, Effective Altruism has been obsessed with the existential risk (x-risk) posed by artificial intelligence. Because the movement’s leaders recognized that this focus could be perceived as “confusing for non-EAs,” they decided to solicit donations and recruit new members through other causes, like poverty and “sending money to Africa.”

When the movement was still small, they planned the bait-and-switch tactics in plain sight (in old forum discussions).

A dissertation by Mollie Gleiberman methodically analyzes the distinction between the “public-facing EA” and the inward-facing “core EA.” Among the study findings: “From the beginning, EA discourse operated on two levels, one for the general public and new recruits (focused on global poverty) and one for the core EA community (focused on the transhumanist agenda articulated by Nick Bostrom, Eliezer Yudkowsky, and others, centered on AI-safety/x-risk).”

“EA’s key intellectual architects were all directly or peripherally involved in transhumanism, and the global poverty angle was merely a stepping stone to rationalize the progression from a non-controversial goal (saving lives in poor countries) to transhumanism’s far more radical aim,” explains Gleiberman. It was part of their “brand management” strategy to conceal the latter.

The public-facing discourse of “giving to the poor” (in popular media and books) was a mirage designed to get people into the movement and then lead them to the “core EA” cause, x-risk, which is discussed in inward-facing spaces. The guidance was to promote the public-facing cause and keep quiet about the core cause. Influential Effective Altruists explicitly wrote that this was the best way to grow the movement.

In public-facing/grassroots EA, the target recipients of donations, typically understood to be GiveWell’s top recommendations, are causes like AMF – Against Malaria Foundation. “Here, the beneficiaries of EA donations are disadvantaged people in the poorest countries of the world,” says Gleiberman. “In stark contrast to this, the target recipients of donations in core EA are the EAs themselves. Philanthropic donations that support privileged students at elite universities in the US and UK are suddenly no longer one of the worst forms of charity but one of the best. Rather than living frugally (giving up a vacation/a restaurant) so as to have more money to donate to AMF, providing such perks is now understood as essential for the well-being and productivity of the EAs, since they are working to protect the entire future of humanity.”

“We should be kind of quiet about it in public-facing spaces”

Let the evidence speak for itself. The following quotes are from three community forums where Effective Altruists converse with each other: Felicifia (inactive since 2014), LessWrong, and EA Forum.

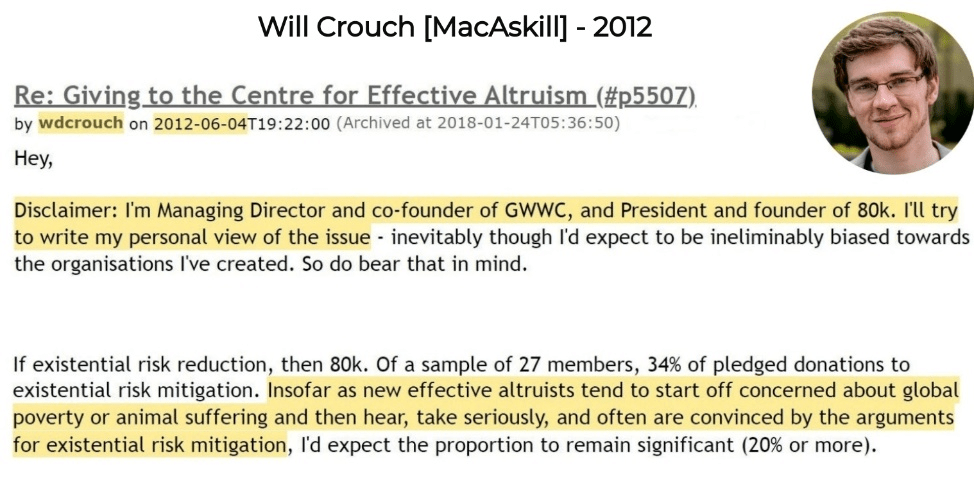

On June 4, 2012, Will Crouch (it was before he changed his last name to MacAskill) had already pointed out (on the Felicifia forum) that “new effective altruists tend to start off concerned about global poverty or animal suffering and then hear, take seriously, and often are convinced by the arguments for existential risk mitigation.”

On November 10, 2012, Will Crouch (MacAskill) wrote on the LessWrong forum that “it seems fairly common for people who start thinking about effective altruism to ultimately think that x-risk mitigation is one of or the most important cause area.” In the same message, he also argued that “it’s still a good thing to save someone’s life in the developing world,” however, “of course, if you take the arguments about x-risk seriously, then alleviating global poverty is dwarfed by existential risk mitigation.”

In 2011, a leader of the EA movement, an influential GWWC leader/CEA affiliate, who used a “utilitymonster” username on the Felicifia forum, had a discussion with a high-school student about the “High Impact Career” (HIC, later rebranded to 80,000 Hours). The high schooler wrote: “But HIC always seems to talk about things in terms of ‘lives saved,’ I’ve never heard them mentioning other things to donate to.” Utilitymonster replied: “That’s exactly the right thing for HIC to do. Talk about ‘lives saved’ with their public face, let hardcore members hear about x-risk, and then, in the future, if some excellent x-risk opportunity arises, direct resources to x-risk.”

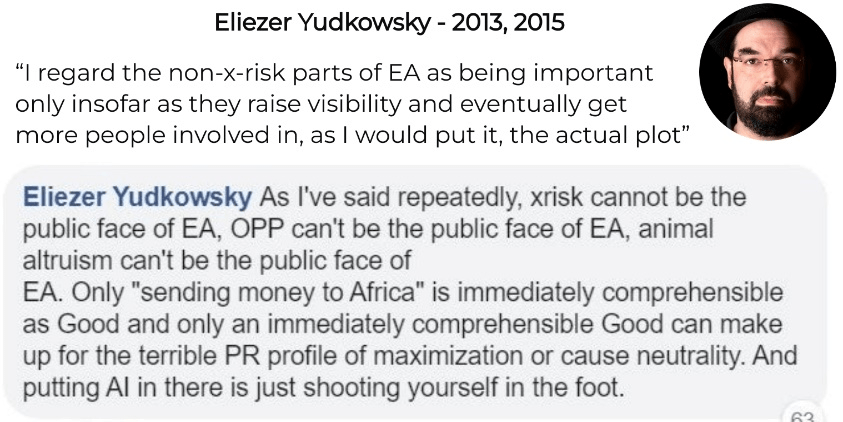

Another influential figure, Eliezer Yudkowsky, wrote on LessWrong in 2013: “I regard the non-x-risk parts of EA as being important only insofar as they raise visibility and eventually get more people involved in, as I would put it, the actual plot.”

As a comment to a Robert Wiblin post in 2015, Eliezer Yudkowsky clarified: “As I’ve said repeatedly, xrisk cannot be the public face of EA, OPP [OpenPhil] can’t be the public face of EA. Only ‘sending money to Africa’ is immediately comprehensible as Good and only an immediately comprehensible Good can make up for the terrible PR profile of maximization or cause neutrality. And putting AI in there is just shooting yourself in the foot.”

Rob Bensinger, the research communications manager at MIRI (and prominent EA movement member), argued in 2016 for a middle approach: “In fairness to the ‘MIRI is bad PR for EA’ perspective, I’ve seen MIRI’s cofounder (Eliezer Yudkowsky) make the argument himself that things like malaria nets should be the public face of EA, not AI risk. Though I’m not sure I agree […]. If we were optimizing for having the right ‘public face’ I think we’d be talking more about things that are in between malaria nets and AI […] like biosecurity and macroeconomic policy reform.”

Scott Alexander (Siskind) is the author of the influential rationalist blog “Slate Star Codex” and “Astral Codex Ten.” In 2015, he acknowledged that he supports the AI-safety/x-risk cause area, but believes Effective Altruists should not mention it in public-facing material: “Existential risk isn’t the most useful public face for effective altruism – everyone including Eliezer Yudkowsky agrees about that.” In the same year, 2015, he also wrote: “Several people have recently argued that the effective altruist movement should distance itself from AI risk and other far-future causes lest it make them seem weird and turn off potential recruits. Even proponents of AI risk charities like myself agree that we should be kind of quiet about it in public-facing spaces.”

In 2014, Peter Wildeford (then Hurford) published a conversation about “EA Marketing” with EA communications specialist Michael Bitton. Peter Wildeford is the co-founder and co-CEO of Rethink Priorities and Chief Advisory Executive at IAPS (Institute for AI Policy and Strategy). The following segment was about why most people will not be real Effective Altruists (EAs):

“Things in the ea community could be a turn-off to some people. While the connection to utilitarianism is ok, things like cryonics, transhumanism, insect suffering, AGI, eugenics, whole brain emulation, suffering subroutines, the cost-effectiveness of having kids, polyamory, intelligence-enhancing drugs, the ethics of terraforming, bioterrorism, nanotechnology, synthetic biology, mindhacking, etc. might not appeal well.

There’s a chance that people might accept the more mainstream global poverty angle, but be turned off by other aspects of EA. Bitton is unsure whether this is meant to be a reason for de-emphasizing these other aspects of the movement. Obviously, we want to attract more people, but also people that are more EA.”

“Longtermism is a bad ‘on-ramp’ to EA,” wrote a community member on the Effective Altruism Forum. “AI safety is new and complicated, making it more likely that people […] find the focus on AI risks to be cult-like (potentially causing them to never get involved with EA in the first place).”

Jan Kulveit, who leads the European Summer Program on Rationality (ESPR), shared on Facebook in 2018: “I became an EA in 2016, and it the time, while a lot of the ‘outward-facing’ materials were about global poverty etc., with notes about AI safety or far future at much less prominent places. I wanted to discover what is the actual cutting-edge thought, went to EAGx Oxford and my impression was the core people from the movement mostly thought far future is the most promising area, and xrisk/AI safety interventions are top priority. I was quite happy with that […] However, I was somewhat at unease that there was this discrepancy between a lot of outward-facing content and what the core actually thinks. With some exaggeration, it felt like the communication structure is somewhat resembling a conspiracy or a church, where the outward-facing ideas are easily digestible, like anti-malaria nets, but as you get deeper, you discover very different ideas.”

Prominent EA community member and blogger Ozy Brennan summarized this discrepancy in 2017: “A lot of introductory effective altruism material uses global poverty examples, even articles which were written by people I know perfectly fucking well only donate to MIRI.”

As Effective Altruists engaged more deeply with the movement, they were encouraged to shift to AI x-risk.

“My perception is that many x-risk people have been clear from the start that they view the rest of EA merely as a recruitment tool to get people interested in the concept and then convert them to Xrisk causes.” (Alasdair Pearce, 2015).

“I used to work for an organization in EA, and I am still quite active in the community. 1 – I’ve heard people say things like, ‘Sure, we say that effective altruism is about global poverty, but — wink, nod — that’s just what we do to get people in the door so that we can convert them to helping out with AI/animal suffering/(insert weird cause here).’ This disturbs me.” (Anonymous#23, 2017).

“In my time as a community builder […] I saw the downsides of this. […] Concerns that the EA community is doing a bait-and-switch tactic of ‘come to us for resources on how to do good. Actually, the answer is this thing and we knew all along and were just pretending to be open to your thing.’ […] Personally feeling uncomfortable because it seemed to me that my 80,000 Hours career coach had a hidden agenda to push me to work on AI rather than anything else.” (weeatquince [Sam Hilton], 2020).

Austin Chen, the co-founder of Manifold Markets, wrote on the Effective Altruism Forum in 2020: “On one hand, basically all the smart EA people I trust seem to be into longtermism; it seems well-argued and I feel a vague obligation to join in too. On the other, the argument for near-term evidence-based interventions like AMF [Against Malaria Foundation] is what got me […] into EA in the first place.”

In 2019, EA Hub published a guide: “Tips to help your conversation go well.” Among the tips like “Highlight the process of EA” and “Use the person’s interest,” there was “Preventing ‘Bait and Switch.’” The post acknowledged that “many leaders of EA organizations are most focused on community building and the long-term future than animal advocacy and global poverty.” Therefore, to avoid the perception of a bait-and-switch, it is recommended to mention AI x-risk at some point:

“It is likely easier, and possibly more compelling, to talk about cause areas that are more widely understood and cared about, such as global poverty and animal welfare. However, mentioning only one or two less controversial causes might be misleading, e.g. a person could become interested through evidence-based effective global poverty interventions, and feel misled at an EA event mostly discussing highly speculative research into a cause area they don’t understand or care about. This can feel like a “bait and switch”—they are baited with something they care about and then the conversation is switched to another area. One way of reducing this tension is to ensure you mention a wide range of global issues that EAs are interested in, even if you spend more time on one issue.”

Oliver Habryka is influential in EA as a fund manager for the LTFF, a grantmaker for the Survival and Flourishing Fund, and the leader of the LessWrong/Lightcone Infrastructure team. He claimed that the only reason EA should continue supporting non-longtermist efforts is to preserve the public’s perception of the movement:

“To be clear, my primary reason for why EA shouldn’t entirely focus on longtermism is because that would to some degree violate some implicit promises that the EA community has made to the external world. If that wasn’t the case, I think it would indeed make sense to deprioritize basically all the non-longtermist things.”

The structure of Effective Altruism rhetoric

The researcher Mollie Gleiberman explains EA’s “strategic ambiguity”: “EA has multiple discourses running simultaneously, using the same terminology to mean different things depending on the target audience. The most important aspect of this double rhetoric, however, is not that it maintains two distinct arenas of understanding, but that it also serves as a credibility bridge between them, across which movement recruits (and, increasingly, the general public) are led in incremental steps from the less controversial position to the far more radical position.”

When Effective Altruists talked in public about “doing good,” “helping others,” “caring about the world,” and pursuing “the most impact,” the public understanding was that it meant eliminating global poverty and helping the needy and vulnerable. Inward, “doing good” and the “most pressing problems” were understood as working to mainstream core EA ideas like extinction from unaligned AI.

In the communication with “core EAs,” “the initial focus on global poverty is explained as merely an example used to illustrate the concept – not the actual cause endorsed by most EAs.”

Jonas Vollmer has been involved with EA since 2012 and held positions of considerable influence in terms of allocating funding (EA Foundation/CLR Fund, CEA EA Funds). In 2018, he candidly explained when asked about his EA organization “Raising for Effective Giving” (REG): “REG prioritizes long-term future causes, it’s just much easier to fundraise for poverty charities.”

The entire point was to identify whatever messaging works best to produce the outcomes that movement founders, thought leaders, and funders actually wished to see. It was all about marketing to outsiders.

The “Funnel Model”

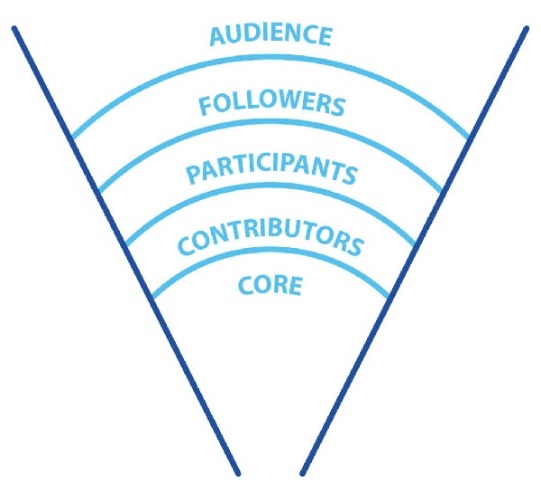

According to the Centre for Effective Altruism, “When describing the target audience of our projects, it is useful to have labels for different parts of the community.”

The levels are: Audience, followers, participants, contributors, core, and leadership.

In 2018, in a post entitled The Funnel Model, CEA elaborated that “Different parts of CEA operate to bring people into different parts of the funnel.”

The Centre for Effective Altruism: The Funnel Model.

At first, CEA concentrated outreach on the top of the funnel, through extensive popular media coverage, including MacAskill’s Quartz column and book, ‘Doing Good Better,’ Singer’s TED talk, and Singer’s ‘The Most Good You Can Do.’ The idea was to create a broad base of poverty-focused, grassroots Effective Altruists to help maintain momentum and legitimacy, and act as an initial entry point to the funnel, from which members sympathetic to core aims could be recruited.

The 2017 edition of the movement’s annual survey of participants (conducted by the EA organization Rethink Charity) noted that this is a common trajectory: “New EAs are typically attracted to poverty relief as a top cause initially, but subsequently branch out after exploring other EA cause areas. An extension of this line of thinking credits increased familiarity with EA for making AI more palatable as a cause area. In other words, the top of the EA outreach funnel is most relatable to newcomers (poverty), while cause areas toward the bottom of the funnel (AI) seem more appealing with time and further exposure.”

According to the Centre for Effective Altruism, that’s the ideal route. It wrote in 2018: “Trying to get a few people all the way through the funnel is more important than getting every person to the next stage.”

The magnitude and implications of Effective Altruism, says Gleiberman, “cannot be grasped until people are willing to look at the evidence beyond EA’s glossy front cover, and see what activities and aims the EA movement actually prioritizes, how funding is actually distributed, whose agenda is actually pursued, and whose interests are actually served.”

Key takeaways

– Core EA

In the Public-facing/grassroots EAs (audience, followers, participants):

- The main focus is effective giving à la Peter Singer.

- The main cause area is global health, targeting the ‘distant poor’ in developing countries.

- The donors support organizations doing direct anti-poverty work.

In the Core/highly engaged EAs (contributors, core, leadership):

- The main focus is x-risk/longtermism à la Nick Bostrom and Eliezer Yudkowsky.

- The main cause areas are x-risk, AI-safety, ‘global priorities research,’ and EA movement-building.

- The donors support highly-engaged EAs to build career capital, boost their productivity, and/or start new EA organizations; research; policy-making/agenda setting.

– Core EA’s policy-making

In “2023: The Year of AI Panic,” I discussed the Effective Altruism movement’s growing influence in the US (on Joe Biden’s AI order), the UK (influencing Rishi Sunak’s AI agenda), and the EU AI Act (x-risk lobbyists’ celebration).

More details can be found in this rundown of how “The AI Doomers have infiltrated Washington” and how “AI doomsayers funded by billionaires ramp up lobbying.” The broader landscape is detailed in “The Ultimate Guide to ‘AI Existential Risk’ Ecosystem.”

Two things you should know about EA’s influence campaign:

- AI Safety organizations constantly examine how to target “human extinction from AI” and “AI moratorium” messages based on political party affiliation, age group, gender, educational level, field of work, and residency. In “The AI Panic Campaign – part 2,” I explained that “framing AI in extreme terms is intended to motivate policymakers to adopt stringent rules.”

- The lobbying goal includes pervasive surveillance and criminalization of AI development. Effective Altruists lobby governments to “establish a strict licensing regime, clamp down on open-source models, and impose civil and criminal liability on developers.”

With AI doomers intensifying their attacks on the open-source community, it becomes clear that this group’s “doing good” is other groups’ nightmare.

– Effective Altruism was a Trojan horse

It’s now evident that “sending money to Africa,” as Eliezer Yudkowsky acknowledged, was never the “actual plot.” Or, as Will MacAskill wrote in 2012, “alleviating global poverty is dwarfed by existential risk mitigation.” The Effective Altruism founders planned – from day one – to mislead donors and new members in order to build the movement’s brand and community.

Its core leaders prioritized the x-risk agenda, and considered global poverty alleviation only as an initial step toward converting new recruits to longtermism/x-risk, which also happened to be how they, themselves, convinced more people to help them become rich.

This needs to be investigated further.

Gleiberman observes that “The movement clearly prioritizes ‘longtermism’/AI-safety/x-risk, but still wishes to benefit from the credibility that global poverty-focused EA brings.” We now know it was a PR strategy all along. So, no. They do not deserve this kind of credibility.

Dr. Nirit Weiss-Blatt, Ph.D. (@DrTechlash), is a communication researcher and author of “The TECHLASH and Tech Crisis Communication” book and “AI Panic” newsletter.
